Hiring a software tool: A simple mental model

I’ve been choosing a lot of software lately.

Asana. Toggl. HubSpot. LinkedIn Premium options. A couple of smaller utilities. Some I’ve kept, some I’ve dropped, and a few I’m still thinking about…

And it reminded me of an analogy I use with clients all the time:

Picking a new software tool is a lot like hiring a new employee.

Not in a cheesy, “tools are people too” way but in a practical decision-making way. It’s a mindset that helps you slow down, ask better questions, and avoid the two classic mistakes:

buying something because the demo was slick, and

sticking with something long after it stopped earning its keep.

This post is a framework you can come back to, whether you’re selecting software for a company, or just choosing a personal app you’re going to trust with your time, attention, money, or data.

TLDR (because this is a long one)

Treat new tools like new hires.

Write the job description. Interview properly. Run a probation period. Onboard deliberately. Do performance reviews. Allow for failure.

The Model

Imagine you’re hiring a new person onto your team. You (hopefully) wouldn’t do this:

see a charismatic candidate on YouTube

glance at a few bullet points on their CV

sign a contract

then hope it all works out

But basically that’s how many tool or app purchases happen.

Instead, try to think like this:

“If this tool were a new hire, what job would we be hiring it to do? And how would we manage it when it starts?”

1) The Job Description: what job are you actually hiring for?

Before you compare features, write the job post.

This doesn’t need to be a novel. Bullet points are fine. It just needs to give enough clarity to avoid hiring someone you don’t need.

A good app job description usually covers:

What outcomes do I need or what’s my ultimate goal?

It’s not “have a CRM”, but rather “I want to track leads from first contact to paid work”.

What specific tasks will it own vs support?

Asana, Monday.com, or Jira don’t do the work. They support project planning, co-ordinate teams, and track tasks. That’s fine, but the split between what the tool owns and what it only supports needs to be in black and white.

What does ‘good’ look like after 30/60/90 days or what are my success factors?

If you can’t describe success, drift will creep in.

Where does it need to fit in my ecosystem?

Most tools don’t fail because they’re bad tools. They fail because they don’t fit how you operate in your day-to-day.

(If you’re not looking for a business tool… the job description still applies to a habit tracker, a notes app, or a budgeting tool. Your ‘team’ is your future self.)

2) The Interview: Don’t just watch the demo - ask the awkward questions

Software demos are like interviews where the candidate only talks about their strengths. Software providers put a lot of effort into making their demos look amazing, but you need to see the other side of the interview.

Here are a few questions I like (for organisations and personal use):

What will this tool make harder or easier?

Even good tools usually have trade-offs, e.g. few integration options, customisations that aren’t quite right, or a workflow that doesn’t exactly mirror your day-to-day. You may need to choose which trade-off you can live with.

What will annoy me using this tool?

Every tool has a tax. Find it as soon as you can.

What does ‘bad usage’ look like?

For example: HubSpot can become a dumping ground. Asana can become a graveyard. Toggl can become… something you stop doing after two weeks. Think about what happens when data goes stale or when the team lose confidence in it.

How will I get help when I’m stuck?

Documentation, support, community, training, YouTube, templates; these matter more than one extra feature.

If you’re buying for a team, also ask:

Who on my team will hate this tool, and why?

That’s not negativity. That’s just the reality of implementation.

3) The Probation Period: Put the software through its paces before you commit

This is the big one!

Don’t rush the transaction. Test it properly.

A probation period (or even a free trial) should not just be “click around for an afternoon”. It’s about using the tool in a real scenario with real stakes (small stakes, ideally).

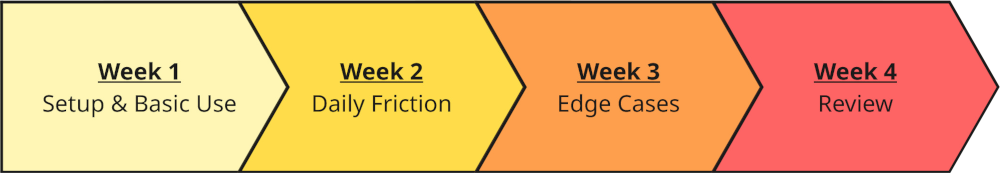

A simple probation plan:

First - Pick one real workflow you actually do on a daily or regular basis.

Then test:

Week 1: Setup & basic use

Can I get to first value quickly? Bonus points for tracking the time you actually spend on the task and comparing it to the time it took before you started using the tool.

Week 2: Daily friction

Does it still feel good on day 10, not just day 1?

Week 3: Edge cases

What happens when something changes, breaks, or needs a workaround?

Week 4: Review

Did this tool actually reduce effort or add effort?
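If you did track your time in Week 1, the Week 4 question can be answered with some very simple arithmetic. Here’s a minimal sketch in Python. The function name and the timings are made up for illustration; swap in whatever you actually logged.

```python
from statistics import mean


def effort_change(before_minutes, after_minutes):
    """Average change in minutes per task (negative means time saved)."""
    return mean(after_minutes) - mean(before_minutes)


# Hypothetical timings (in minutes) for the same workflow, logged by hand.
before = [30, 35, 25]  # before the trial
after = [20, 25, 15]   # during the trial

change = effort_change(before, after)
verdict = "reduced effort" if change < 0 else "did not reduce effort"
print(f"Average change per task: {change:+.1f} min ({verdict})")
```

A spreadsheet does the same job. The point is to compare like with like: the same workflow, measured the same way, before and during the trial.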

(Personal version: If you’re trying an app, run a 2–4 week trial where you use it the way you intend to use it long term.)

4) Onboarding & training: your new hire needs support

I’ve often been left twiddling my thumbs for days, weeks, or months after starting a new job. Don’t let that happen when it comes to a new piece of software!

A tool doesn’t “just work” because it has features. It works because people build habits around it.

So budget for:

a setup period

training time

a few mistakes

some frustration

and a bit of iteration

For companies, this is where tool rollouts go wrong. Leadership often buys a tool then nobody owns or controls the adoption of it. The tool becomes ‘shelfware’ and everyone quietly returns to spreadsheets.

For individuals, the equivalent is: you download an app, use it for three days, then forget it exists.

Tools are habit-forming devices - treat onboarding as part of the purchase.

5) Performance appraisals: is this tool still earning its keep?

This is the part people rarely do and it’s arguably the most valuable idea in this whole analogy.

A tool you chose two years ago might not fit your world today, so give it a performance review.

Here’s a lightweight ‘tool appraisal’:

Ask yourself:

Is it still doing the job we hired it for?

Or are we using it out of habit…

What benefits are we actually getting?

Time saved? Fewer errors? Better visibility? Less stress?

What’s the cost now?

Not just the money but also friction, admin, duplication, and context switching.

Have our needs changed?

If your business model or goals changed, your toolset may need to as well.

What would replacing it cost?

Another important question. Sometimes “good enough for now” is a fine answer. Sometimes you end up paying an ongoing technology tax.

I probably do this review a little too often myself - sometimes daily or weekly!

But a good rule of thumb would be doing this quarterly for business tools, and a couple of times a year for personal ones.
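If it helps to make the appraisal concrete, here’s a rough sketch of the questions above as a small Python scorecard. Everything in it is an assumption for illustration: the 0-5 scores, the field names, the decision rules, and the example tool are mine, not a standard. Adjust them to suit how you weigh things.

```python
from dataclasses import dataclass


@dataclass
class ToolAppraisal:
    name: str
    still_does_job: bool   # Is it still doing the job we hired it for?
    needs_changed: bool    # Have our needs changed since we chose it?
    benefit: int           # 0-5: time saved, fewer errors, visibility, less stress
    running_cost: int      # 0-5: money plus friction, admin, duplication
    replacement_cost: int  # 0-5: effort and risk of switching to something else


def recommend(a: ToolAppraisal) -> str:
    """Illustrative rules only; the thresholds are assumptions."""
    fits_today = a.still_does_job and not a.needs_changed
    if fits_today and a.benefit > a.running_cost:
        return "keep"
    if a.replacement_cost > a.running_cost:
        return "good enough for now"
    return "consider replacing"


# Hypothetical example: the tool no longer fits, but switching would hurt more.
review = ToolAppraisal(
    name="Example CRM",
    still_does_job=False,
    needs_changed=True,
    benefit=2,
    running_cost=3,
    replacement_cost=5,
)
print(review.name, "->", recommend(review))
```

The scores matter less than the habit. Writing the answers down each quarter makes it much easier to spot a tool that is slowly drifting from “keep” towards “consider replacing”.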

6) Allow for failures

Some hires don’t work out. Some tools won’t either.

That doesn’t mean you failed. It just means you ran an experiment and learned something. I’ve been through this myself a number of times.

To make things easier:

keep exports/backups in mind - try to ensure you can dump out your data when the time comes

avoid over-customising too early - this means more rework in future

document ‘how we use this tool’ as you go - knowledge management is a whole other topic but reference documentation for software is invaluable, especially when it’s first brought in to a company

and don’t build a fragile process that only works if the tool behaves perfectly, or if one key resource isn’t on annual leave - allow for fallbacks, shared knowledge, and workarounds

Want help with this?

If you’re choosing software right now - whether it’s a CRM, project management, automation, reporting, or a whole messy ecosystem - this is exactly the kind of work I do with clients. I help them make sense of their needs, reduce risk, and choose the tools that genuinely fit.

If you’d like a hand, you can book a call here: Scheduling an intro